Building an Interactive Pokédex with LRU Cache (Part III)

8kb

Welcome to the final part of our LRU cache journey! In Part 1, we explored the theory and fundamental data structures. In Part 2, we implemented our cache in Rust and compiled it to WebAssembly. Now it's time for the fun part - putting our WebAssembly LRU cache to work in a practical, interactive application.

Using The Cache

I'm going to posit an interesting application of an LRU cache that includes Pokémon. Yes those things. Remember the Pokédex? It's pretty much a device that acts as an encyclopedia of Pokémon. But it only applies to ones you've caught or seen.

We are going to implement a special spec of a Pokédex.

The Spec

Since obviously the technology in the Pokémon universe is still stuck in the 60s1 our Pokédex can only hold a maximum of 10 Pokémon, using the Signetics 8-bit RAM chip.

As we explore Pokémon, we want to make wise choices on how we store and get rid of Pokémon once we find more. Let's say that Pokémon that we look up a lot are more important than ones we don't. In other words we want to scrap the Pokémon accessed the least.

The LRU Cache seems like a perfect data structure to use behind the scenes of our Pokédex.

Implementation

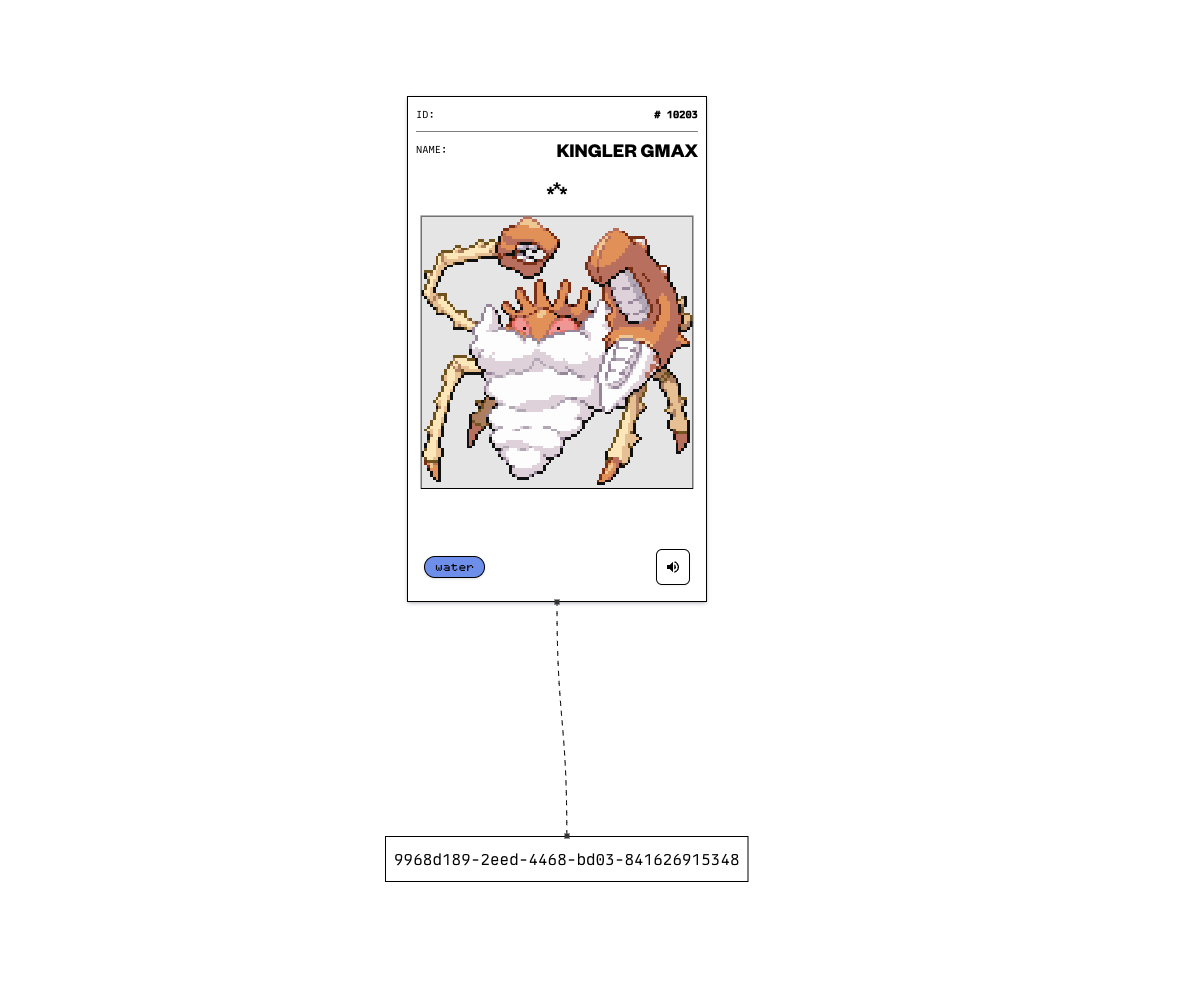

We will need a way to represent this problem in terms of an LRU cache. I suppose we would need a way to map a Pokmeon to an item in the cache. One way to do this is to assign a uuid and map that to a Pokémon. The map then has keys as uuids that point to the underlying data structure that contains the Pokémon. It would like something like this:

Great! Then our cache can handle the size by setting it's capacity and also the eviction of Pokémon we don't really view that much. Cool, but what does it look like in action?

Pokédex

I decided to build this React component Pokédex using the wonderful react-flow library2 to model what the inside of this Pokédex might look like. This is an MDX component that is running the wasm module we built earlier as the LRU cache along with some other React magic.

Try play around with it and see what happens! You can adjust the cache size, explore Pokémon, and retrieve the Pokémon. All these actions affect the underlying data structures3 so we can see how adding, accessing and removing Pokémon work in practice and in relation to the LRU cache. You can drag, zoom in and out, and move the nodes around.

It will4 obey all the rules of an LRU cache as you perform the actions above. Use it to learn and visualise what happens under the hood of an LRU cache.

As you can see, LRU caches have some real-life applications. Whilst Pokémon are arguably not real, building devices with constraints that match an LRU cache are.

Real-World Applications

While our Pokédex example is fun, LRU caches have numerous practical applications in computing:

Web Browser Caches

Browsers use LRU-like policies to manage cached web resources. When storage is full, the least recently accessed pages, images, and scripts are removed to make room for new content.

Database Buffer Pools

Database management systems use LRU caches to keep frequently accessed pages in memory. This dramatically improves query performance by reducing disk I/O operations.

Operating System Page Replacement

Operating systems employ LRU algorithms when managing virtual memory. When physical memory is full, the least recently used pages are moved to disk storage.

CPU Caches

Modern processors use variants of LRU algorithms in their cache hierarchies to decide which cache lines to evict when new data needs to be loaded.

Content Delivery Networks (CDNs)

CDNs use LRU policies to manage which content to keep cached at edge servers, ensuring popular content stays readily available.

Performance Considerations

Our implementation using a deque has some trade-offs:

Advantages:

- Simpler to implement in Rust than linked lists

- Good for educational purposes and moderate-sized caches

- Integrates well with WebAssembly

Disadvantages:

- O(n) complexity for updates due to index shifting

- Higher memory overhead for index management

For production systems with high performance requirements, you might consider:

- Using language-specific optimized implementations

- Approximate LRU algorithms like LRU-K or Clock

- Hardware-accelerated cache implementations

Lessons Learned

This project taught me several valuable lessons:

-

Choose the right tool for the job: Rust's ownership model makes certain data structures challenging to implement, but adapting the approach led to a working solution.

-

WebAssembly integration complexity: While powerful, integrating WebAssembly with modern web frameworks requires careful consideration of build processes and type safety.

-

Educational value of visualization: Building an interactive demo helped solidify understanding of how LRU caches behave in practice.

-

Real-world constraints drive design: The 60s-era Pokédex constraint provided a relatable context for understanding cache capacity limits.

The End

This project took a lot longer than I thought it would. I ran into loads of obstacles with Rust, Linked Lists, WebAssembly, Next.js and all the things we covered in this post. Was it frustrating as hell? Yes. But did I learn a lot from it? Also yes.

This is what software engineering is all about, banging your head against the wall and building something for the sake of it, and sharing it with the world. I hope this post inspires you to go build random stuff and reap the joy of figuring stuff out along the way.

Series Recap

Over this three-part series, we've covered:

- Part 1: The theoretical foundations of LRU caches and the data structures that power them

- Part 2: Implementing an LRU cache in Rust, tackling ownership challenges, and compiling to WebAssembly

- Part 3: Building a practical, interactive application that demonstrates LRU cache behavior

Whether you're working on system optimization, building web applications, or just curious about how caches work under the hood, I hope this series has provided valuable insights into both the theory and practice of LRU caches.

Contact

If you have any comments or thoughts you can reach me at any of the places below.

***

Footnotes

A big thanks to Deirdre Murray-Wallace, Joe Eid, Spike Jensen and Martin Rabone for reviewing this post and making it better.